Overview

One might concisely define AI as human mimicry, so it is no wonder that designers tend to represent AIs as virtual beings with names, life-like avatars, and even voices. Embodiment is the term for these tangible human-like features given to AI, and it carries both opportunities and drawbacks. Embodied technologies are rapidly proliferating: voice assistants and chatbots are now ubiquitous, and anthropomorphic robots may soon follow. However, AI by no means requires, or even benefits from, embodiment. Instead, embodiment should be seen as a deliberate decision in which benefits are weighed against a host of complex design challenges.

The Human Touch

Our society has a mythology around humans creating machines ‘in our own image’. Yet even on a more pragmatic level, designers tend to ascribe subjective human traits to their work—friendly, empathetic, inviting, trustworthy. Many of us want our everyday systems and services to have a ‘human touch’, so that everything from our HR department to our local sandwich shop can make every interaction as wonderful as possible.

However, we should not mistake these desires for a more humane world as desires for a world with more human-like machines. A virtual avatar does not make every technology more friendly, no matter how cute or playful it looks. After all, that virtual avatar will still have to represent a flawed product.

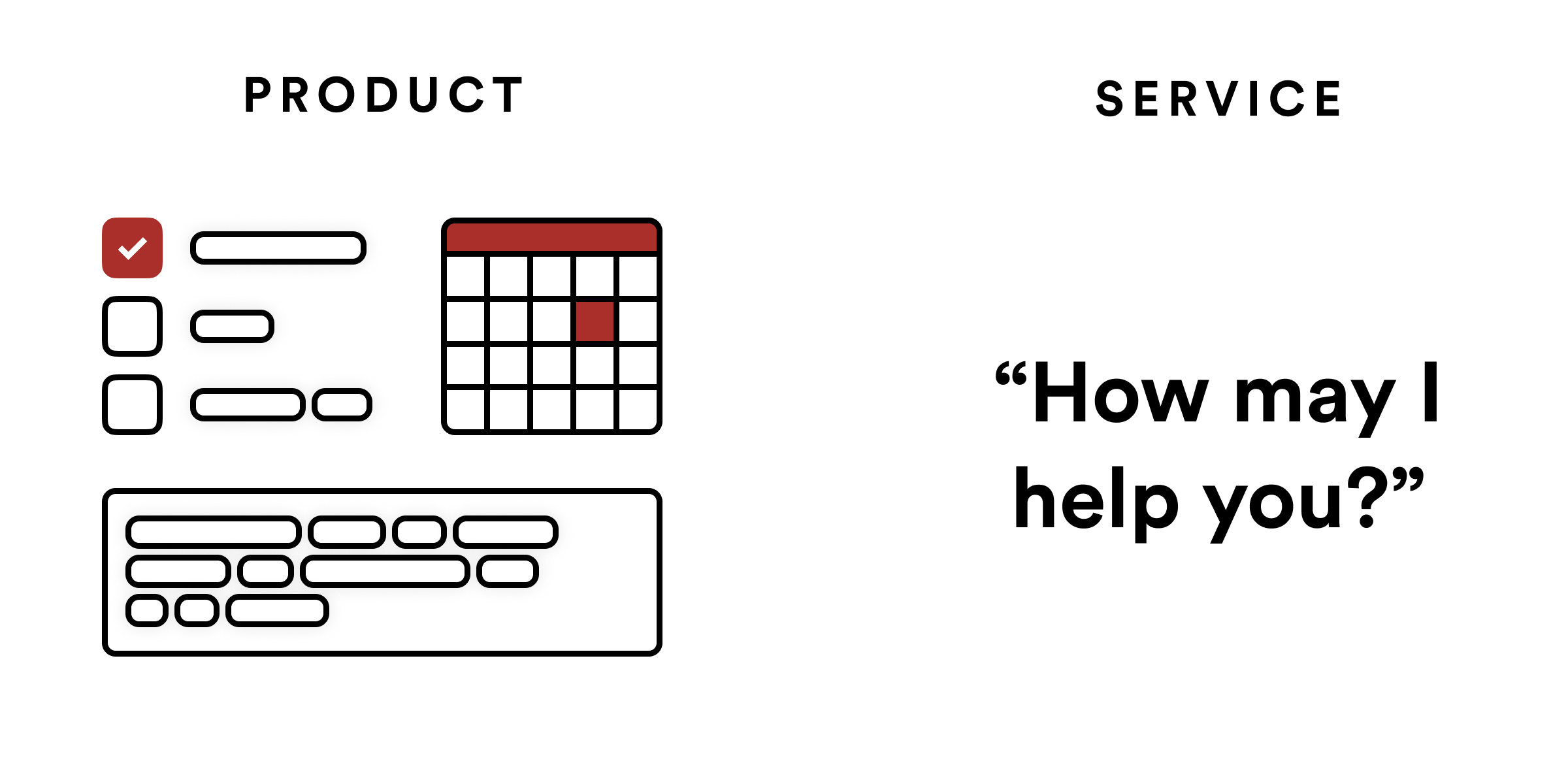

Products Versus Services

Are you designing a product, or a service? Embodiment often blurs this line. In a product, users expect a standardized thing, whereas in a service, users expect a customized and individualized solution. Services often require interfacing with a human who can answer questions or handle exceptions. Unfortunately, an embodied interface can make a product feel like a service, creating highly mismatched expectations for users. When the service delivers a pre-scripted behavior, users can become distrustful or confused. Some products even attempt to weave in a service experience by having a human agent magically replace the ‘bot’. However, designers should make sure that users receive an interaction that matches their expectations, or at least know when they are talking to a real human.

Human Interaction

When Embodiment Works

Embodiment works when it makes user interaction more seamless and accessible by reducing, rather than erecting, barriers. Voice assistants have done this with some success. Unlike the lifelessness of touching a phone’s rectangular pane of glass, human interaction is natural and intuitive. Imagine a first-time phone user attempting to listen to music on a modern smartphone. They would first have to decipher a litany of unfamiliar shapes and UIs before arriving at anything resembling a music listening experience. Voice assistants instead allow users to make requests more naturally, without tapping or swiping through an unfamiliar technology. Gesture recognition may take this even further, reducing barriers for non-native speakers or sign-language users. Embodiment works when natural actions yield expected results that conform to our personal experiences.

Clarity and Obfuscation

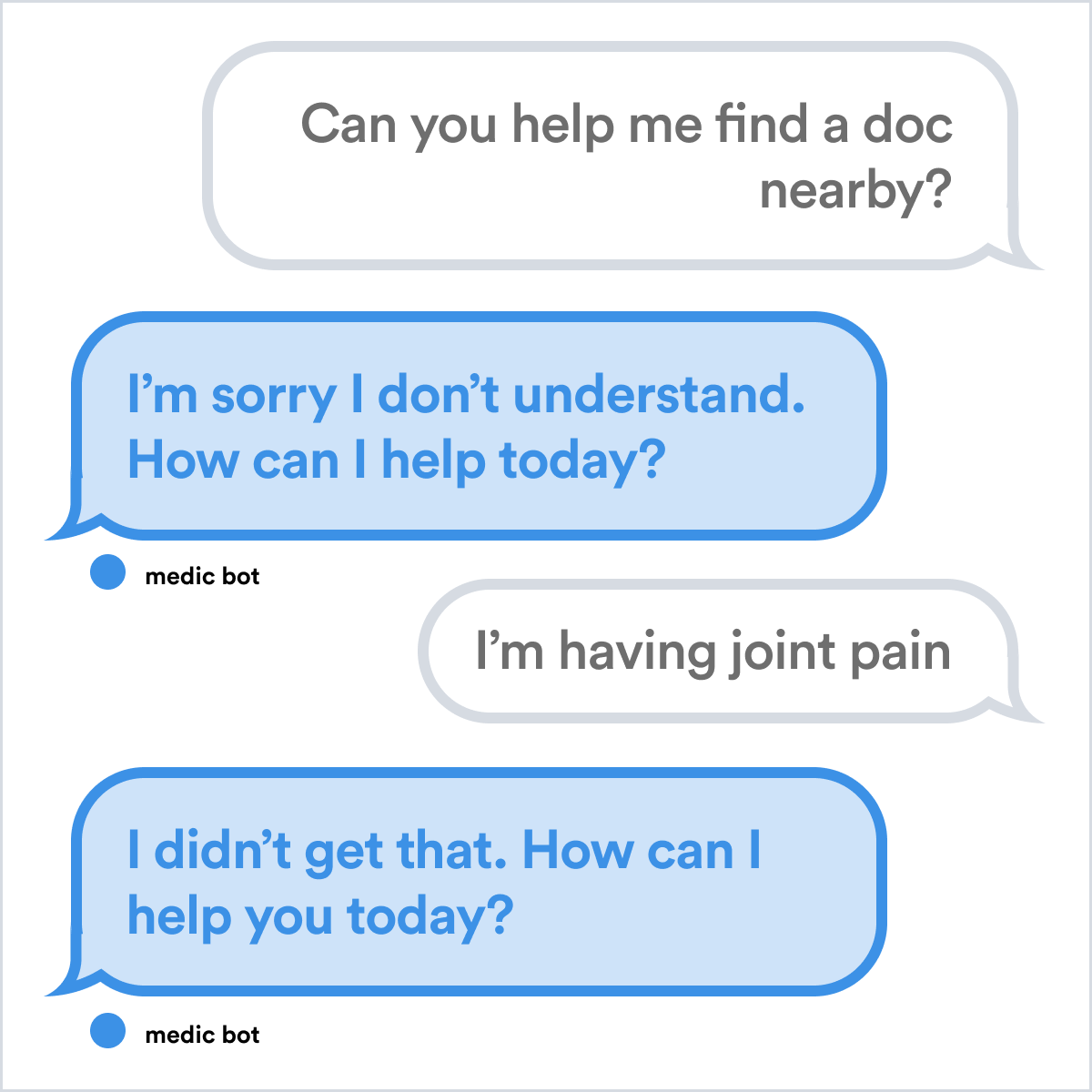

Unfortunately, embodiment often undermines its intended design. As humans, we hold a variety of expectations of other people, and we carry roughly the same expectations over to embodied technologies. Designing embodied technology may require you to make some challenging decisions about age, gender, and personality. It will also encourage potentially awkward and embarrassing behaviors (one of the most commonly uttered phrases to chatbots is “I love you”[1]). We expect anything speaking to us in natural language to have a wide range of conversational abilities, opinions on various subjects, a sense of humor, and even individual quirks. When those features do not exist, it ‘breaks the fourth wall’ and we stop interacting in a natural way. Without clear boundaries around what these systems are capable of, embodied AI will inevitably fall short of our expectations.

The Unfriendly Valley

Unlike graphical user interfaces, conversational interfaces often lack a clarity of purpose, and users remain unaware of the majority of the system’s capabilities. GUIs contain descriptive text, buttons, menus, and other interactive elements that convey every capability to the user. In a conversational setting, users are made to rely on generic queries such as “what can you do?” or “how do you do X?” to discover the system’s uses. Therefore, when designing a conversational agent, consider creative ways of conveying capabilities to a user, such as visual aids, tutorials, or other indicators (see Prototyping AI with Humans).

Individuality

Perhaps a common cause of embodiment’s overuse is the designer’s expectation that AI will ‘eventually’ begin to mimic humans extremely well. The media entrenches this belief by reporting optimistically that each new technology may finally replace human interaction.

However, consider whether your product even needs to be packaged in a human-like way at all. We call this bias towards embodiment the myth of individuality. Take the simple example of booking a hotel room. Perhaps you imagine designing a hotel-booking service as a virtual clerk who fields questions and concerns from the user. Of course, such a service may also be represented as an input form, where users can select dates and pick from a list of available options with checkboxes. But these two options are far from equivalent. Many behaviors that are easy with a simple form become laborious in front of a virtual clerk. People often spend inordinate amounts of time fiddling with forms, trying out date ranges and then narrowing in on their final choice. With a clerk, this would be akin to asking hundreds of questions, repeating oneself over and over, and changing one’s mind mid-conversation. In this case, a self-service form with checkboxes might turn out to be more human-centered than a virtual human.

Design Questions

Considerations

Agentive Systems

Agents should be regarded as a separate kind of interface rather than a replacement for an in-person experience.

Default Conditions

Beware of default behaviors for agents, as users will encounter them disproportionately more often than any other behavior.

Self-Service vs. Counter Service

Determine whether your users would prefer ‘self-service’ or ‘counter-service’ interactions.

Expectation Management

Embodiment creates vast expectations for capabilities and runs the risk of uncanniness.

Internal Voice

It often helps to have an interface provide prompts through an ‘internal voice’ to avoid the design challenges of embodiment.

Product/Service Mismatch

Agentive tools can mistakenly turn a product experience into a service experience, where users expect the product to be delivered as a customized solution.

Further Resources

- Designing Agentive Technology by Chris Noessel

Footnotes

[1] AI makes the heart grow fonder, Nikkei Asian Review