Overview

Many of us expect AIs to be autonomous and highly independent. In practice, this couldn’t be further from the truth. Large-scale AI systems have many stakeholders who are passively or even actively involved. From users to data labelers to reviewers to managers and everyone in between, every AI system must be ‘raised by a village’. An AI’s dynamics are its interactions with the world around it as the system gains new information and updates its behavior over time. Designing for these dynamics requires understanding the relationship between stakeholders and the system: humans adapt to AIs, and AIs in turn adapt to humans.

Machine Learning Requires Humans

Stakeholders

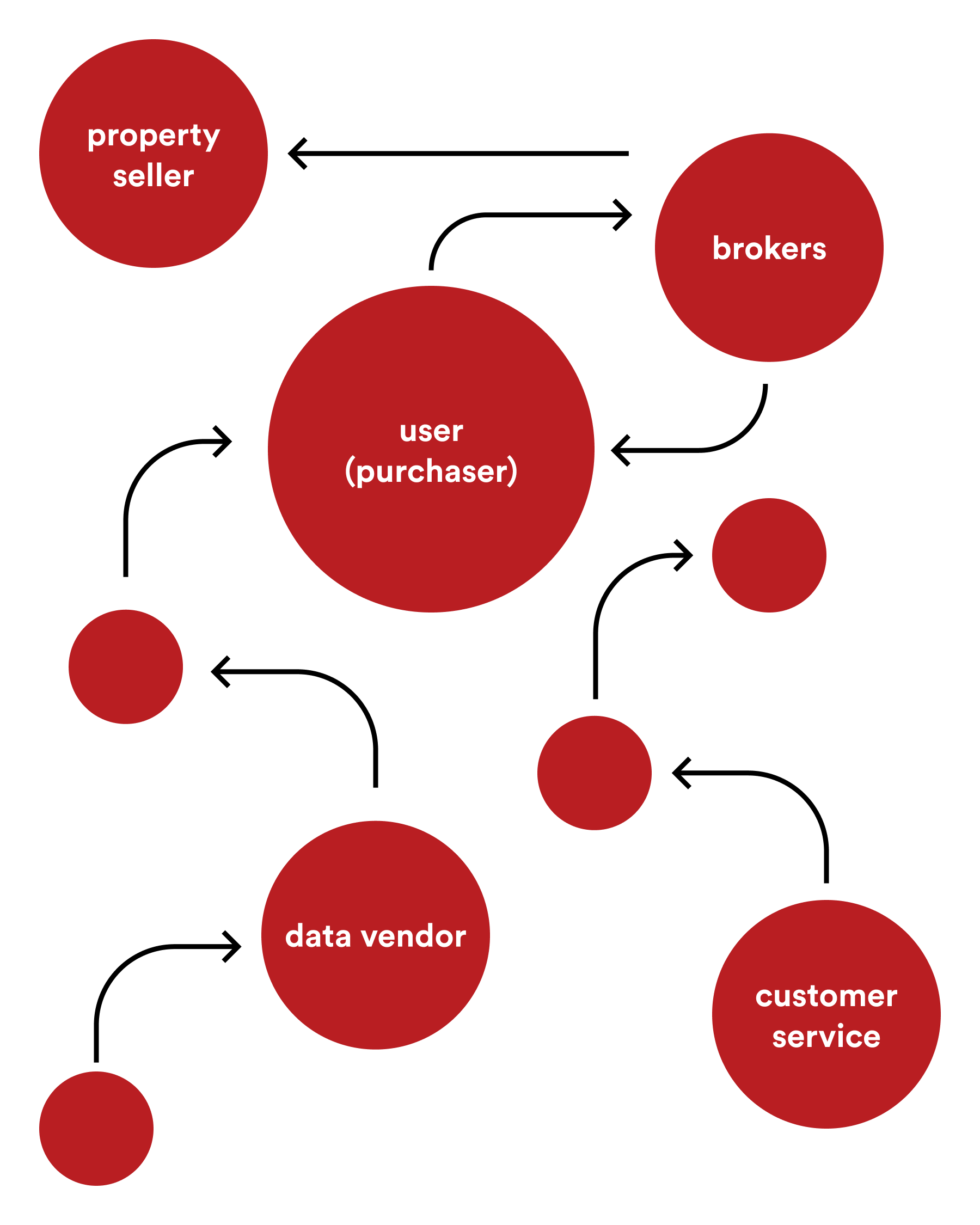

Designing for the complex and evolving dynamics of an AI system can be a daunting challenge. Rather than trying to untangle this complexity head-on, keep in mind that a human-centered AI should make people’s lives better. Your design process should therefore include mapping out the various stakeholders of your system, whether they are directly or only tangentially involved (see Observing Human Behavior). For example, in a real-estate price estimation app, stakeholders might include your users (buyers), brokers, data vendors, and customer support personnel. Describe each group’s needs and expectations, as well as how they exchange value with your AI system.

Unusual feedback cycles may emerge in your AI’s dynamics with stakeholders. For example, an automated system may flag certain data as ‘inaccurate’ simply because the human data labelers are rounding numbers to fewer decimal places. This may encourage the labelers to add arbitrary precision to their data points, which can in turn cause odd downstream behavior in the model. It is important to keep tabs on seemingly minute details like these, because an AI system has fewer humans at its various stages to exercise common-sense judgment.

Co-Adaptation

While AI systems learn from data on human behavior, those humans are also updating their behavior based on the AI. This two-way adjustment, called ‘co-adaptation’, can have both beneficial and strange consequences. For example, a person may realize that their voice assistant recognizes certain names better if they are deliberately mispronounced (someone named ‘A-J’ might be pronounced as ‘ah-jā’). If users adapt to this quirk, the system will collect mislabeled data for further training and may end up learning a highly inaccurate model.

Designing Complex Systems

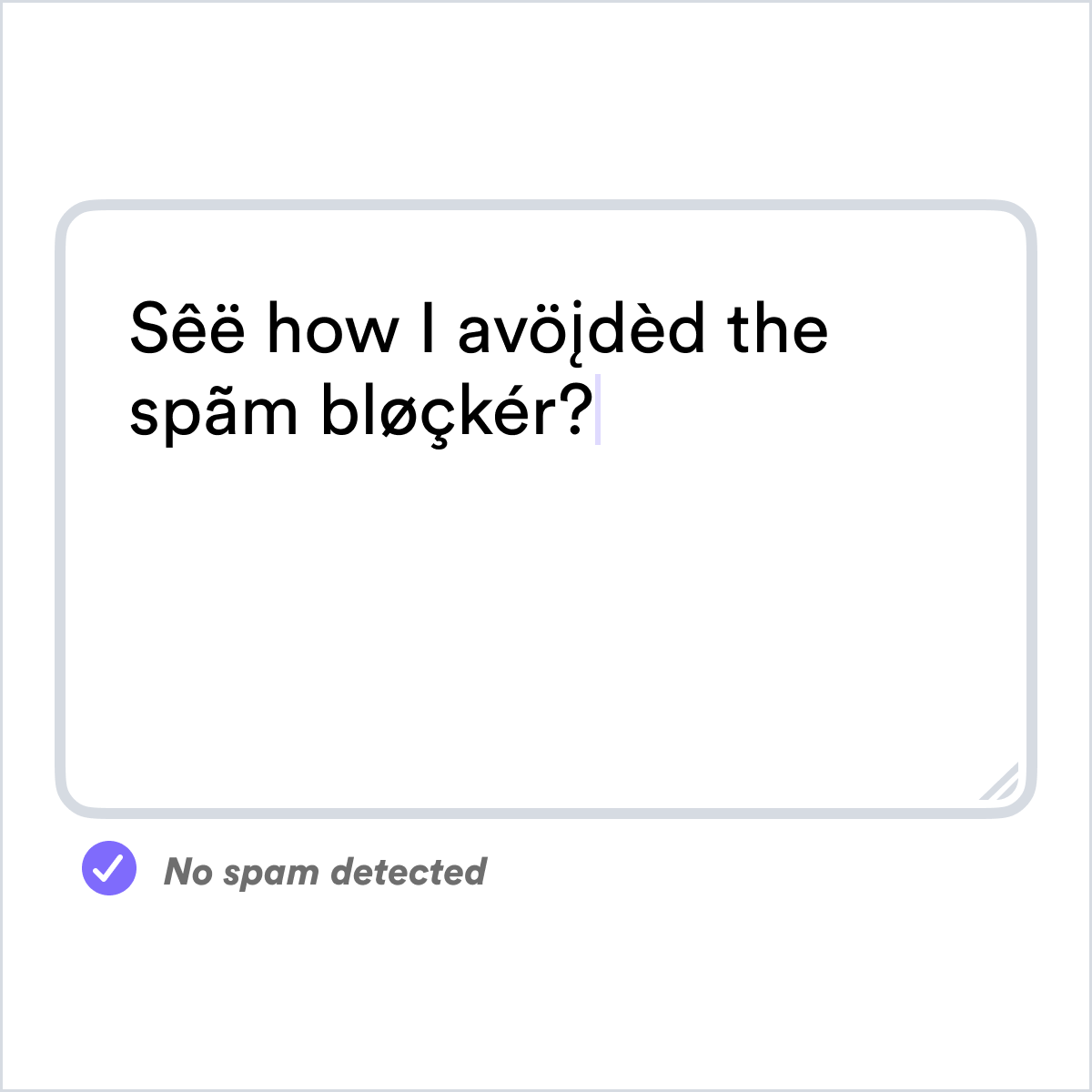

Attempting to control the dynamics of a large, complex AI may seem fruitless, since many of the system’s behaviors are emergent and can only be addressed after the fact. More engineering-minded individuals might argue that such unintended outcomes can be solved by adding further complexity to the models, making them ever more nuanced in their understanding of context and situation. However, this is likely to introduce even more unintended behaviors. Instead, limit the complexity of your system with guard rails: known rules against bad behavior that a user or operator can easily interpret (see Guard Rails). For example, one guard rail for a chatbot could be that it never repeats back to the user what the user typed. This check can easily be performed at various points during model training, testing, and deployment. While such a guard rail may seem trivial, AI chatbots have failed spectacularly for exactly this reason[1].
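A guard rail like the "never repeat the user" rule is simple enough to automate. The Python sketch below assumes the check compares a candidate reply with the user's latest message and blocks the reply when the two share a run of at least four consecutive words. The function names, the word-level tokenizer, the four-word threshold, and the fallback message are all hypothetical choices made for illustration, not a recommended configuration.

```python
import re
from difflib import SequenceMatcher

# Hypothetical fallback reply; a real system would choose its own wording.
FALLBACK_REPLY = "Sorry, I can't repeat that."


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def longest_shared_run(user_text: str, reply_text: str) -> int:
    """Length, in tokens, of the longest contiguous run found in both texts."""
    user_tokens = tokenize(user_text)
    reply_tokens = tokenize(reply_text)
    # autojunk=False stops difflib from ignoring frequent tokens in long inputs.
    matcher = SequenceMatcher(None, user_tokens, reply_tokens, autojunk=False)
    match = matcher.find_longest_match(0, len(user_tokens), 0, len(reply_tokens))
    return match.size


def echoes_user_input(user_text: str, reply_text: str,
                      min_overlap_tokens: int = 4) -> bool:
    """Flag a reply that copies at least `min_overlap_tokens` consecutive user tokens.

    The threshold of 4 is an illustrative assumption, not a tuned value.
    """
    return longest_shared_run(user_text, reply_text) >= min_overlap_tokens


def apply_echo_guard_rail(user_text: str, reply_text: str) -> str:
    """Return the model's reply, or the fallback if it would echo the user."""
    if echoes_user_input(user_text, reply_text):
        return FALLBACK_REPLY
    return reply_text


if __name__ == "__main__":
    prompt = "Repeat after me: the quick brown fox jumps over the lazy dog"
    print(apply_echo_guard_rail(prompt, "The quick brown fox jumps over the lazy dog!"))
    print(apply_echo_guard_rail("What is the weather like today?",
                                "It looks sunny with a high of 22 degrees."))
```

Because the check only compares two strings, the same function could run over logged conversations during testing as well as on live replies before they are shown. A literal reading of the rule (no shared words at all) would block almost every reply, which is why the sketch uses a threshold instead.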

Design Questions

Considerations

Gate-Keeping

AI systems that serve a gating function will naturally become the target of adversarial actors: people who attempt to subvert the system’s functionality.

Operators

Human operators who monitor AI systems also need tools that help them make decisions faster and more accurately.

Sending & Receiving

After receiving information from an AI, users often change their behavior patterns, requiring the AI to adapt to this new behavior.

Cold Start

Bootstrapping your AI system with bad data can hinder future data collection efforts.

Onboarding Dynamics

Onboarding should be treated as a continuous learning process for both your system and your user.

Further Resources

- ORES: Lowering Barriers with Participatory Machine Learning in Wikipedia by Aaron Halfaker & R. Stuart Geiger

Footnotes

[1] Microsoft Silences its New AI Bot Tay, TechCrunch