AI as Design Space

When designing a human-centered system, it also helps to step away from the data and focus purely on the human experience, so you can determine how the system should ‘feel’. The design space for AI systems is broad and open-ended (see Augmentation), and many designers struggle to frame the problem in terms other than technical capabilities. One way to overcome this is to think of your AI system as a decision-making system. In general, a decision-making system may involve one or more humans and may not require any AI at all; every AI system, however, is a decision-making system. Examples include college admissions (for education), government (for policy), diets (for food), and countless others. Framing your system this way is powerful because it lets you imagine a solution without first requiring a technical foundation.

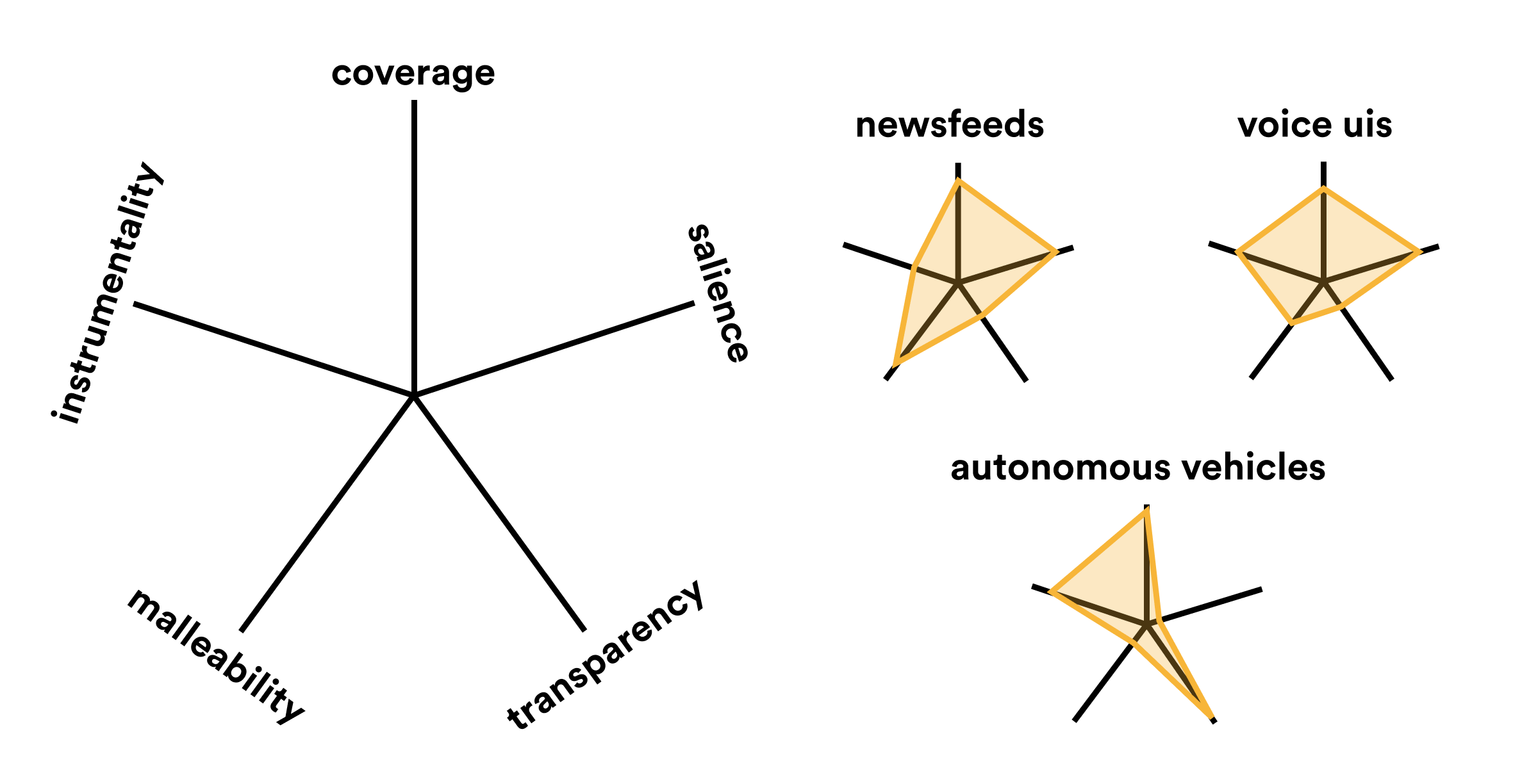

To that end, we have designed a simple chart that can help you define your decision-making system. This diagram is a spider chart with five axes[1]:

Coverage: Which data is needed to make decisions, and how comprehensive should it be?

Salience: How surprising, bold, or unexpected should the decisions be?

Transparency: How easily should one understand the decision and its reasoning?

Malleability: How should decisions shift over time, and based on what?

Instrumentality: How specific or task-oriented should the decision be?

These five axes can be plotted on a radar chart (also called a star chart or spider chart), where the point on each axis represents the value of that parameter, from ‘none’ at the center to ‘max’ at the outer end. For example, if your system has high coverage (it needs an extensive data set), place a dot near the ‘max’ end of the ‘coverage’ axis, as in the newsfeed example.
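If you want to sketch profiles programmatically rather than by hand, mapping axis values to points on the chart is straightforward. The Python sketch below is a minimal illustration under stated assumptions: each axis is scored from 0.0 (‘none’) to 1.0 (‘max’), the five axes are spaced evenly and run clockwise starting with coverage at the top, and the function name `radar_vertices` and the example scores are hypothetical, not values taken from the chart above.

```python
import math

# Assumed axis order: follows the list above, with coverage drawn at the top.
AXES = ["coverage", "salience", "transparency", "malleability", "instrumentality"]


def radar_vertices(profile: dict[str, float]) -> list[tuple[float, float]]:
    """Return the (x, y) corner of the chart's polygon for each axis.

    Hypothetical helper. Scores are assumed to be normalized so that
    0.0 means 'none' (the chart's center) and 1.0 means 'max'
    (the outer end of the axis).
    """
    vertices = []
    for i, axis in enumerate(AXES):
        value = profile[axis]
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{axis} must be between 0 and 1, got {value}")
        # Start at 90 degrees (straight up) and step clockwise by 360/5 degrees.
        angle = math.pi / 2 - 2 * math.pi * i / len(AXES)
        vertices.append((value * math.cos(angle), value * math.sin(angle)))
    return vertices


# Hypothetical scores for illustration only; not read from the newsfeed chart.
newsfeed = {
    "coverage": 1.0,
    "salience": 0.6,
    "transparency": 0.2,
    "malleability": 0.8,
    "instrumentality": 0.3,
}
for axis, (x, y) in zip(AXES, radar_vertices(newsfeed)):
    print(f"{axis:>15}: ({x:+.2f}, {y:+.2f})")
```

Connecting the vertices in order, and back to the first, traces the system's overall ‘shape’. A plotting library could draw the polygon, but it is left out here to keep the sketch dependency-free.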

Using the chart and the provided examples, try to map out where your desired solution would fit. Keep in mind that there is no ‘perfect’ answer, and the intent is not to frame an ideal decision-making system as one where every axis is at 100%. In fact, many great AI systems do not require much transparency (see Transparency), and a system that is too malleable or salient may violate the user’s expectations (see Intuition). What matters is arriving at a general ‘shape’: treat the diagram as a conversation starter rather than a strict plan.

Designing for Human Interaction

Designing for human-AI interaction is a skill distinct from data modeling, almost like exercising a different muscle. It will not always make sense to present your system’s decisions in a direct question-and-answer format, nor will it always make sense for the AI to function in a fully ‘autonomous’ way. Think of the spell-check tool on your phone’s keyboard: to work, it must infer context and make corrections at the appropriate time, yet as a completely automated tool, it often gets things very wrong. Neither a request-response mode nor a fully autonomous mode suffices; some kind of hybrid approach is needed.

Ultimately, designing human-AI interaction requires some lateral thinking, because the possibilities are nearly limitless. Users may interact directly with an AI, or the AI may require only partial interaction (for example, setting it up or giving it initial preferences). The AI may quietly assist the user by tracking key metrics, or speak up to alert the user when issues arise. Users may interact through the AI while barely noticing it, as with language translation or scrolling through a news feed. Users may ask the AI for a portfolio of solutions, then select and refine one; or the AI could even select from the user’s own portfolio of solutions.

Grappling with the number of possible interaction designs can be daunting. The key is to keep entertaining different possible solutions, from your first brainstorms all the way to implementation. As you develop new and creative approaches, refer back to the decision-making diagram as an assessment and comparison tool, and use it to discuss each solution’s pros and cons constructively.

Footnotes

[1] Our five-sided diagram was partially inspired by the taxonomy of recommender systems presented in this paper.