Technical Risk

Technology is certainly the most daunting element of AI, as most problems seem confined by what technology can or cannot do. However, by now you should be well-equipped to face this kind of technical risk by developing a strong dataset backed by solid opinions about your AI’s design tradeoffs. While you must obviously choose a technology, the later steps in this handbook should also equip you to iterate and improve on that choice so that it will not come back to bite you.

Choosing Domains

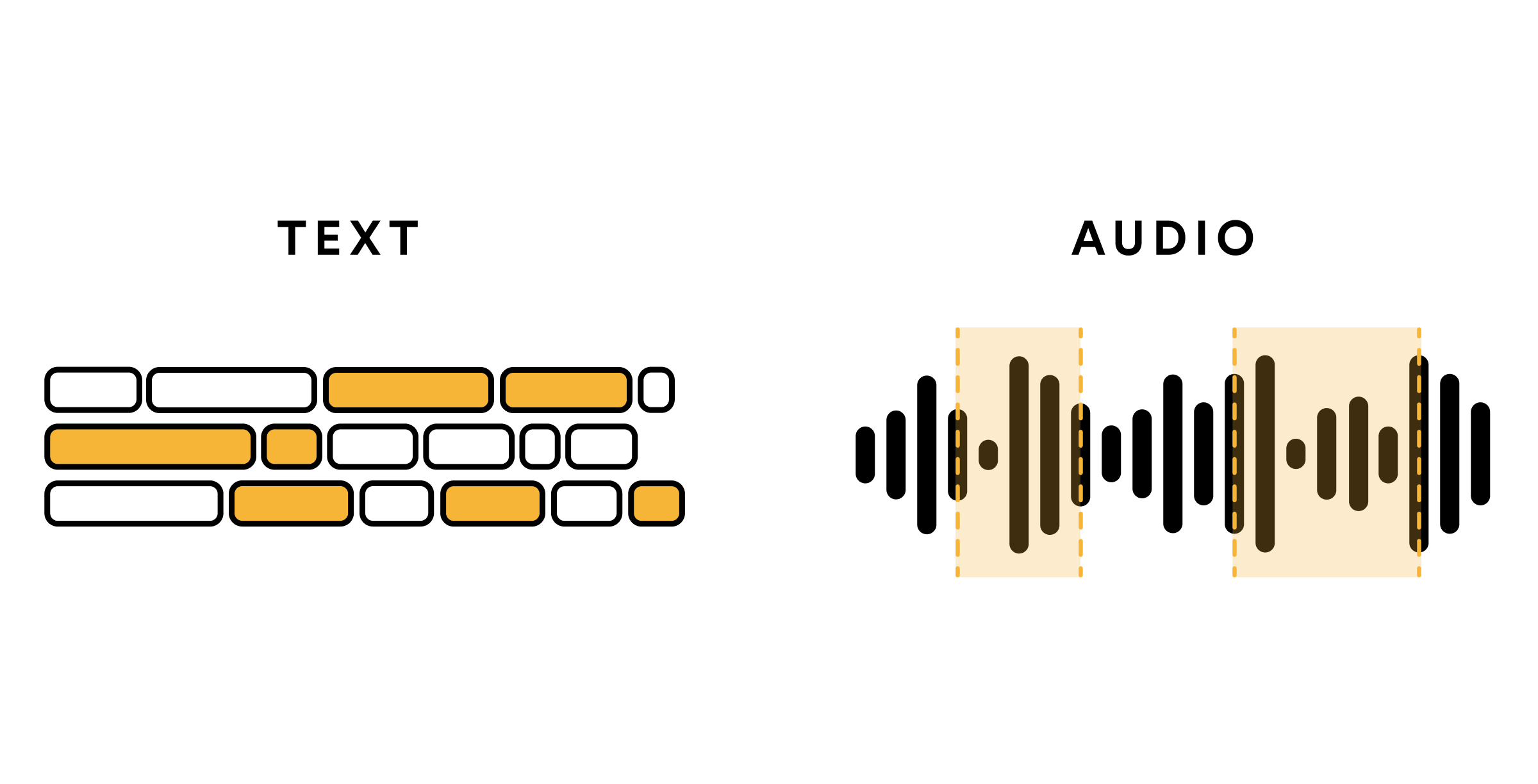

So how does one go about this special step of selecting and implementing the AI algorithm? In fact, your choice of algorithm matters less than you may expect! More important than selecting a particular algorithm is selecting one from the correct domain. Here, domain refers to the type of data you are processing (tabular, image, audio, text, etc.), as most algorithms are tailored to exploit implicit properties of their domain. For example, a Transformer model exploits the sequential structure of language, where relationships between words stretch across the text. A Decision Tree exploits the structure of tabular data, where multi-level interactions between features matter most.

Resources on Algorithm Selection

As long as you select an algorithm from the right domain, most differences between individual algorithms should not matter greatly. Engineers might scoff at this, but our experience supports the idea of keeping it simple when it comes to algorithms. The simpler the algorithm, the more likely you are to discover problems in your data rather than problems in the algorithm itself. Simpler, more general algorithms help you validate your hypotheses (for example, whether or not a given dataset can produce a good model) more quickly. Finally, a simpler algorithm allows you to put your model into practice more quickly, allowing you to gather user feedback before you waste time optimizing and fine-tuning a broken system. In many cases, users will forgive an imperfect system, but users will not forgive a system that lacks usability.
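As a rough illustration of how a simple model can test whether a dataset carries any signal, the sketch below compares a majority-class baseline with a one-rule "decision stump". The feature values, labels, and function names are hypothetical and chosen only for illustration. For brevity, accuracy is measured on the same data used to pick the rule; in practice you would check it on held-out data.

```python
def majority_baseline_accuracy(labels):
    """Accuracy of always predicting the most common label."""
    most_common = max(set(labels), key=labels.count)
    return labels.count(most_common) / len(labels)


def best_stump(feature, labels):
    """Find the rule 'predict 1 if feature >= threshold' with the highest accuracy.

    Returns (threshold, accuracy). Ties keep the lowest threshold.
    """
    best_threshold, best_accuracy = None, 0.0
    for threshold in sorted(set(feature)):
        correct = sum(
            1 for x, y in zip(feature, labels) if (x >= threshold) == bool(y)
        )
        accuracy = correct / len(labels)
        if accuracy > best_accuracy:
            best_threshold, best_accuracy = threshold, accuracy
    return best_threshold, best_accuracy


# Hypothetical data: one numeric feature and a binary label per example.
feature = [1, 2, 3, 4, 5, 6, 7, 8]
labels = [0, 0, 0, 1, 0, 1, 1, 1]

baseline = majority_baseline_accuracy(labels)
threshold, stump_accuracy = best_stump(feature, labels)
print(f"baseline accuracy: {baseline:.3f}")
print(f"stump accuracy:    {stump_accuracy:.3f} (threshold {threshold})")
```

If even a rule this simple clearly beats the baseline, the dataset probably contains usable signal. If it does not, the data deserves a closer look before anyone reaches for a more complex algorithm.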

There are cases where a complex algorithm performs better than a simple one, and we recognize the value of certain specific techniques based on the task. However, we make the claim that simpler is better in most cases. This is not only pragmatic, but also allows your team to focus on the most important aspect of your AI product—its impact on humans.

Errors (or, why wrong isn’t always wrong)

Instead of attempting to minimize errors at all costs, it is crucial to recognize that a well-designed AI reflects deliberate choices about when to be wrong, as well as how wrong to be. For more context on the general types of errors, see Errata. More than accuracy itself, consider the risk of negative outcomes as the key indicator of success. This indicator cannot be read from the technology alone, as it requires a holistic perspective on the design challenge. Yet it has myriad implications for the choice of technologies as well as the choice of parameters for those technologies.
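To make the difference between accuracy and risk concrete, here is a minimal sketch that scores two sets of predictions by accuracy and by total error cost. The labels, predictions, and the two cost values are hypothetical assumptions; real costs have to come from your own analysis of the design challenge.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


def total_cost(y_true, y_pred, cost_fp, cost_fn):
    """Sum the cost of false positives and false negatives."""
    false_positives = sum(1 for t, p in zip(y_true, y_pred) if p and not t)
    false_negatives = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    return false_positives * cost_fp + false_negatives * cost_fn


# Hypothetical data: 1 marks the outcome we want to catch.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
cautious = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]  # flags too much, misses nothing
lenient = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]   # flags little, misses one case

# Assumed costs: a missed case is 20 times worse than a false alarm.
COST_FP, COST_FN = 1, 20

for name, y_pred in (("cautious", cautious), ("lenient", lenient)):
    print(
        f"{name}: accuracy={accuracy(y_true, y_pred):.1f}, "
        f"cost={total_cost(y_true, y_pred, COST_FP, COST_FN)}"
    )
```

Under these assumed costs, the less accurate set of predictions is also the less risky one. Reversing the costs would reverse that conclusion, which is why the choice has to come from the design context and not from the algorithm.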

One of these choices is between recall and precision. Recall is the percentage of actual occurrences of the phenomenon that your predictions caught, while precision is the percentage of your positive predictions that were correct. In most cases, increasing one of these comes at the expense of the other, and no universally optimal ‘compromise’ exists. You must determine in your own particular situation whether it is less risky to overpredict but catch all occurrences (high recall & low precision) or whether it is less risky to underpredict and deal with fewer mistakes (low recall & high precision). Situations where overprediction is better generally include: medical diagnosis, predicting costly outcomes, and fraud detection. Situations where underprediction is better generally include: hiring, buying real estate, and credit card approvals. Finally, keep in mind that in some cases, you may want a mix of precision & recall, especially when you want your solution to be perceived as ‘generally’ accurate rather than risk-averse.
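The tradeoff can be seen in a small sketch that computes precision and recall for the same model scores at three decision thresholds. The labels, scores, and thresholds are made up for illustration, and returning 0.0 when a ratio is undefined is one convention among several.

```python
def precision_recall(y_true, scores, threshold):
    """Return (precision, recall) when predicting positive for score >= threshold."""
    predicted = [score >= threshold for score in scores]
    tp = sum(1 for p, t in zip(predicted, y_true) if p and t)
    fp = sum(1 for p, t in zip(predicted, y_true) if p and not t)
    fn = sum(1 for p, t in zip(predicted, y_true) if not p and t)
    # Convention (an assumption): report 0.0 when the ratio is undefined.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical data: four true occurrences among ten examples.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.95, 0.80, 0.60, 0.30, 0.70, 0.40, 0.35, 0.20, 0.10, 0.05]

for threshold in (0.25, 0.50, 0.75):
    precision, recall = precision_recall(y_true, scores, threshold)
    print(f"threshold={threshold:.2f}  precision={precision:.2f}  recall={recall:.2f}")
```

On this toy data, the lowest threshold catches every occurrence at the price of more false alarms, while the highest threshold makes no false alarms but misses half of the occurrences.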

Feedback and Self-Improvement

A common refrain about AI is that it can improve itself over time by feeding its own errors back into the system. In unpacking this belief, it first helps to recognize that even non-AI systems are ‘self-improving’ if you include the fact that designers learn from the system’s mistakes and make improvements. Of course, this is not the kind of automated self-improvement that most of us think of with AI. Yet the majority of AI in use today uses precisely this idea—data that is collected via the AI gets sent back to human reviewers who re-label the data.

Next, consider the challenges that an automatically self-improving system would pose to the designer. The AI would become non-stationary, and perhaps unpredictable in its behavior (see Dynamics). If the system stopped making useful recommendations, it would be harder to know why. For this reason, many companies opt for a form of manual self-improvement, where every new ‘version’ of the AI is trained on recent data and passed through a rigorous testing phase (this is called human-in-the-loop design).
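One way to picture the testing phase is as a release gate: a retrained version is promoted only if it clears fixed quality floors and does not regress against the version currently in use. The sketch below is one hypothetical form such a gate could take. The `Evaluation` class, the metric floors, and the tolerance are all assumptions, and a human reviewer would still make the final call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Evaluation:
    """Metrics for one model version, measured on the same held-out test set."""
    precision: float
    recall: float


def release_decision(candidate, current, min_precision, min_recall, tolerance=0.02):
    """Return (approved, reasons) for promoting a candidate model version.

    The candidate must meet absolute floors and must not fall more than
    `tolerance` below the current version on either metric.
    """
    reasons = []
    if candidate.precision < min_precision:
        reasons.append("precision below required floor")
    if candidate.recall < min_recall:
        reasons.append("recall below required floor")
    if candidate.precision < current.precision - tolerance:
        reasons.append("precision regressed against current version")
    if candidate.recall < current.recall - tolerance:
        reasons.append("recall regressed against current version")
    return (not reasons, reasons)


# Hypothetical numbers for the deployed version and a retrained candidate.
current = Evaluation(precision=0.80, recall=0.70)
candidate = Evaluation(precision=0.82, recall=0.60)

approved, reasons = release_decision(
    candidate, current, min_precision=0.75, min_recall=0.65
)
print("approved" if approved else "rejected: " + "; ".join(reasons))
```

Because the gate returns its reasons, a rejected version comes with an explanation, which keeps the question of why the system changed answerable.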

In all, our recommendation is to stay away from the logic of an AI improving itself over time. Stay away from using this justification in your brainstorming, as it minimizes dissenting voices by playing the ‘technology’ card. If your AI cannot provide value immediately, you probably need to take a fresh look at your design and adjust to user feedback, which we discuss in the next section.