The Real World

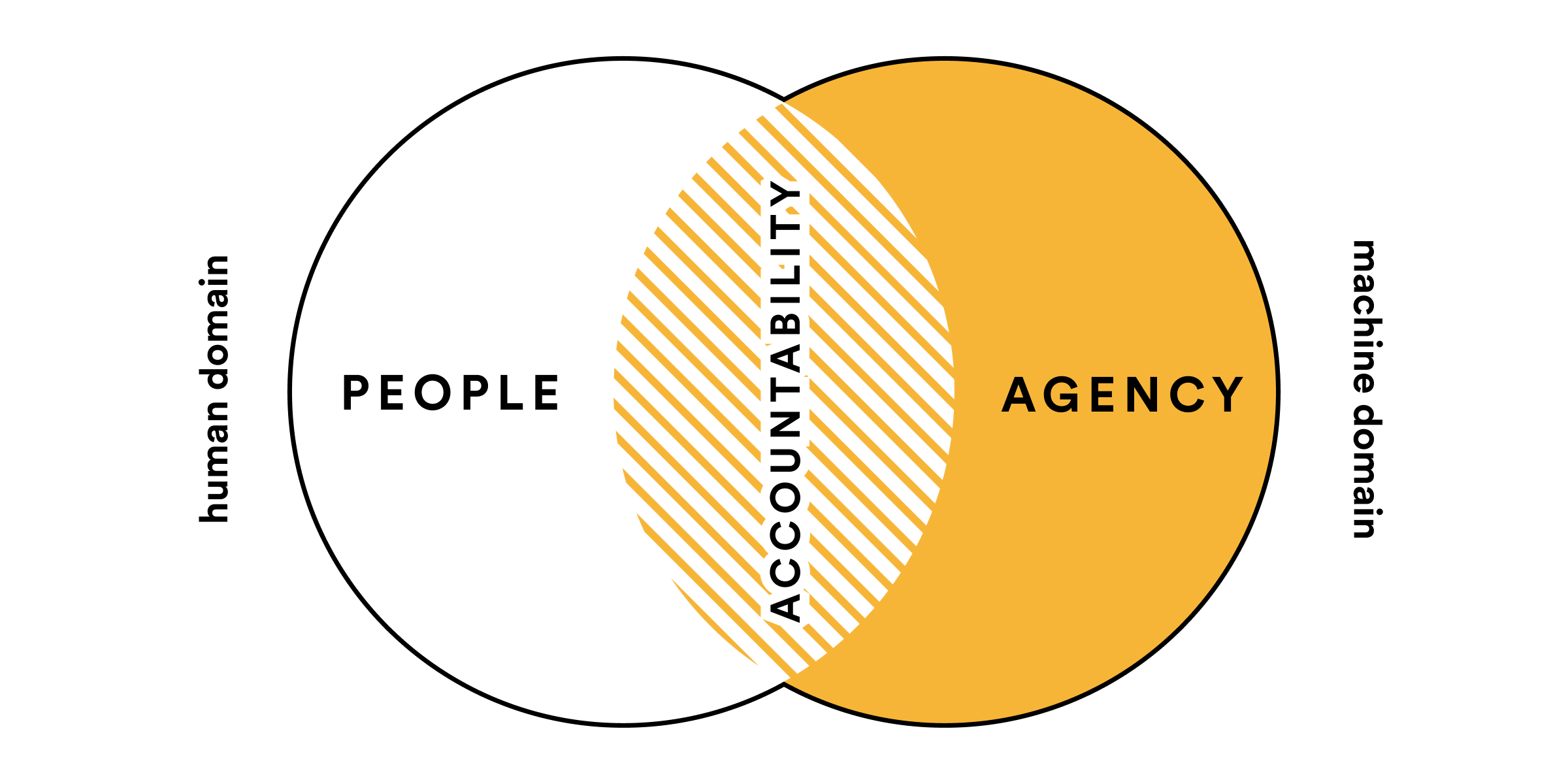

AI systems still fail in the real world when they conflict with the human systems around them. This is typically a good thing: human systems exist in management, regulation, privacy, accountability, and almost everywhere else in day-to-day life in order to lower risks and improve society. This naturally frustrates ambitious AI creators, who see the opportunity to overcome inefficiencies and optimize outcomes. However, the solution is not to avoid human systems but to work alongside or within them. A human-centered AI system should function like a good team member, helping align values among many stakeholders. Predictive systems often fall into the trap of attempting to sidestep existing human systems. A predictive factory optimization system, for example, has to align its values with those of the factory’s manager. Otherwise, the manager might just shut off the system when its results don’t match their expectations. This value misalignment problem has two dimensions, which we detail below: agency and accountability.

Agency

Intelligent machine systems naturally require humans to trust them, because a sufficiently intelligent technology may have to make decisions that humans don’t understand. When humans place trust in an AI system, that system gains agency, or the ability to make decisions on its own. For example, smart robotics systems can plan movements that were not explicitly designed by a person. It is tempting to assume that AIs must have maximum agency to be successful. However, this is not true in the real world. AI systems may provide immense value without any agency at all, just by becoming ‘decision support systems’ that only provide information to a human decision maker. Decision support systems are pervasive across industries such as logistics and manufacturing. In fact, companies may currently suffer from too much information from decision support systems rather than too little. Designing a decision support system requires careful study of human behavior and human responses to external information. Too much information, or information presented with a misleading confidence interval, can hurt rather than help.

Another common misconception is that agency equates to full autonomy. Instead, agency is a spectrum that may involve oversight or executive control from humans. For example, an automated trading platform for finance may have the ability to make trades, yet humans (trading managers, regulators, etc.) might still require certain guarantees (see Guard Rails). This is a form of partial agency, in which the most successful AIs are capable of working within human systems rather than vice versa. Thinking of agency as a spectrum can broaden the design space of possible solutions.
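As one hypothetical illustration of partial agency, the trading example can be sketched as a small routing rule: the system executes small trades on its own, escalates larger ones to a human, and refuses anything beyond a hard cap. The `Trade` class, the `route_trade` function, and both thresholds are assumptions made up for this sketch; they do not describe any real trading platform.

```python
from dataclasses import dataclass


@dataclass
class Trade:
    """A proposed trade (hypothetical structure for this sketch)."""
    symbol: str
    quantity: int
    price: float

    @property
    def notional(self) -> float:
        # Total value at stake, ignoring whether it is a buy or a sell.
        return abs(self.quantity) * self.price


# Assumed guard-rail thresholds; real limits would come from managers or regulators.
AUTO_LIMIT = 10_000.0    # at or below this, the system may act on its own
HARD_LIMIT = 100_000.0   # above this, the system may never act


def route_trade(trade: Trade) -> str:
    """Decide how much agency the system has over a given trade."""
    if trade.notional > HARD_LIMIT:
        return "reject"                 # no agency: outside the guard rails
    if trade.notional > AUTO_LIMIT:
        return "needs_human_approval"   # partial agency: a human signs off
    return "execute"                    # full agency within a narrow band
```

Moving the two thresholds shifts the system along the spectrum: setting both to zero turns it into a pure decision support system, while raising them grants it more autonomy.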

Accountability

The flip side of agency is accountability. An AI is accountable if its decisions can be inspected or verified in some fashion. An accountable AI is not necessarily one with an ‘explainability module’ or other method of inspecting model parameters (see Transparency). An accountable AI may not be fully explainable, but may simply exist to distill the data into a consumable form. Sometimes, an AI can become more accountable simply by communicating its input dataset more clearly.

For machine intelligence to gain adoption in mainstream industries and businesses, systems must be accountable in both normal and exceptional situations. AI systems have known limitations, including imperfect accuracy, so gaining human trust requires thoughtful and careful design.

Ethics

Several people have asked us why ethics is not a ‘ninth’ principle of Lingua Franca. We feel that ethics is not a separate principle of AI, nor a unique aspect of AI at all. Any technology or tool should be designed under an ethical framework; AI is not a special case of ethics (barring certain questions of machine consciousness). We have viewed the emergence of ‘algorithmic fairness’ with some skepticism. Recently, algorithms have been developed that purport to encode ethics and fairness, automating systems to be just and impartial[1]. Yet humans don’t agree completely on a shared ethical framework, so whose ethical framework will the AI listen to? We cannot find the ‘average’ of different belief systems. The average of a vegetarian diet and a meat-eating diet is not a diet that includes meat 50% of the time.

As an alternative to these ‘ethics automation’ frameworks, we propose a framework built on shared agency and accountability, with user choice and intervention as core features. Ethics in AI should not be so convoluted as to require a separate profession; ethics should be distributed across an organization, flowing through and between individuals so that conversations about design naturally arrive at ethical questions. More crucially, everyone should have the tools to speak up and suggest improvements, so that no one person or set of people holds the keys to building ethical technology.

Footnotes

[1] While we disagree with the assumptions behind algorithmic fairness, in the interest of furthering discussion, we want to point to a core research paper in this domain so readers can make their own judgment: “Fairness Through Awareness” by Dwork et al. In contrast, a recent research paper challenges the theoretical underpinnings of algorithmic fairness: “Algorithmic Fairness from a Non-ideal Perspective” by Sina Fazelpour and Zachary C. Lipton.